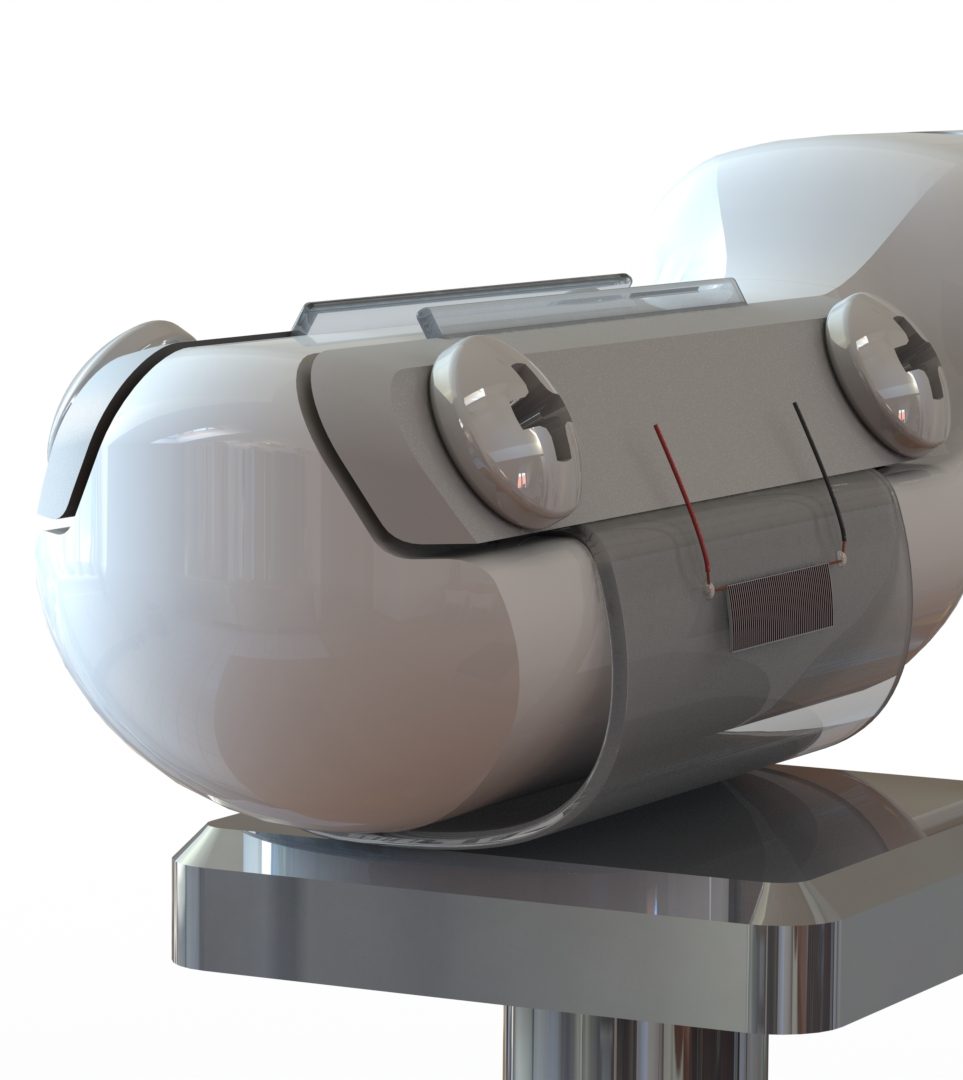

Whether a prosthetic hand is a simple body-powered hook or an advanced anthropomorphic device, it will be useful and desirable to an amputee only if it improves quality of life and is intuitive to control. One way to reduce the user's cognitive burden while also enhancing the functionality of artificial hands is to empower the prosthesis itself to sense, think, and act. This would free the user to focus on high-level commands rather than low-level details that may be frustrating, or even impossible, to coordinate between human and machine. A semi-autonomous system can gain the trust of its operator only through reliable, context-dependent performance. Such context-aware performance will require information about the forceful interactions between the prosthetic hand and everyday objects in unstructured environments, and that information can be obtained only through touch. To this end, a flexible, multimodal tactile sensor skin system for artificial fingertips, based on a multilayer microfluidic architecture, is to be designed, modeled, fabricated, and tested.